Digital Epidemiology: Combining Big Data and Traditional Methods

DATA

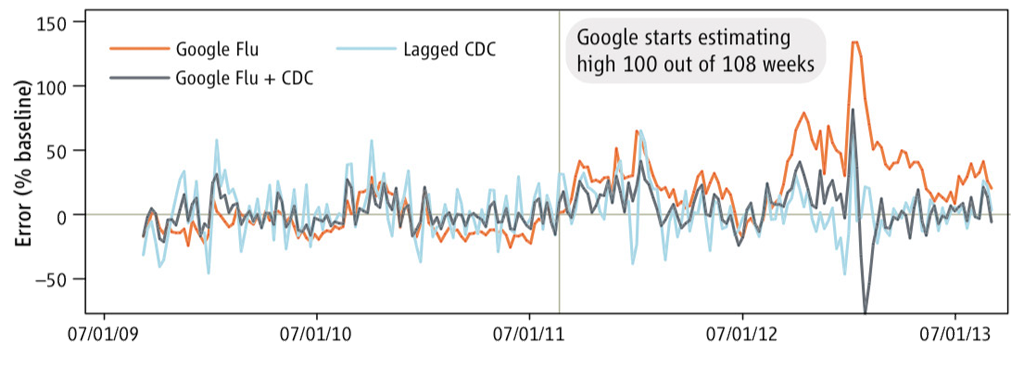

Researchers are using a combination of Google Flu Trends search data and Centers for Disease Control surveillance reports from hospital labs to estimate

IMPACT

Errors in predicting flu patterns from 2011 to 2013 were considerably reduced by using Google searches and surveillance reports together

Photo: “Influenza Virus,” Sanofi Pasteur. Accessed from http://flic.kr/p/93W3ME. Creative Commons BY-NC-ND license available at https://creativecommons.org/licenses/by-nc-nd/2.0. No changes were made.

After failing to detect the H1N1 (swine flu) epidemic in 2009, over-reporting the 2012 flu season by 50%, and overestimating flu prevalence in 100 out of 108 weeks from 2011 to 2013, Google Flu Trends (GFT) announced plans in 2014 to partner with the Centers for Disease Control (CDC) to improve its predictions.1 2 One of the criticisms surrounding GFT is its lack of transparency. Because Google keeps the search algorithm and the relative weights attached to search terms hidden, outside researchers, tech experts, and health officials cannot help improve the predictive model.3

The Challenges of Search-Based Prediction

GFT miscalculated flu levels for several reasons. One is Google's autocomplete feature, which offers a list of search predictions based on the search behavior of nearby users, leading some people to click on suggestions they might not otherwise have searched for. A second factor is widespread media coverage of an issue, which can cause a spike in searches on a topic even when the topic is not directly relevant to the searcher.4 Google has tried to improve its algorithm to take these factors into account. A 2014 academic paper found that GFT was still overestimating flu prevalence about 74% of the time, although this is an improvement from 94% overestimation two years prior.5
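The overestimation figures cited above are shares of weeks in which the estimate exceeded the observed value. As a minimal sketch of that calculation, the Python below uses made-up weekly numbers; the function name `overestimation_rate` and all data values are hypothetical and are not taken from GFT or the CDC.

```python
def overestimation_rate(estimates, observed):
    """Return the share of periods in which the estimate exceeds the observed value."""
    if len(estimates) != len(observed) or not estimates:
        raise ValueError("inputs must be non-empty and of equal length")
    weeks_over = sum(1 for e, o in zip(estimates, observed) if e > o)
    return weeks_over / len(estimates)


# Hypothetical weekly flu-prevalence values (illustrative only, not real data).
search_estimates = [2.1, 3.4, 4.0, 2.8]
surveillance_observed = [1.8, 3.0, 4.2, 2.5]

rate = overestimation_rate(search_estimates, surveillance_observed)
print(f"Overestimated in {rate:.0%} of weeks")
```

Note that a rate like this counts only how often the estimate runs high, not by how much.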

Data, Big and Small

Traditional surveillance reporting continues to predict more accurately than GFT, but the most powerful tool is a combination of both. Mean absolute error, the average size of the gap between forecasts and actually observed outcomes, is a common way of comparing the two; a score of zero indicates a perfect forecast. In 2011-13, the mean absolute error was 0.49 for GFT and 0.31 for CDC reports, but only 0.23 for a combination of GFT and lagged CDC data.6 Google and the CDC announced plans in 2014 to partner to provide the most accurate information possible. This is a step in the right direction, but it is critical that Google search results also be presented separately from the CDC data so that the accuracy of signals from each independent source can be evaluated.7
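To make the mean absolute error comparison concrete, the Python sketch below computes it for three hypothetical forecasts. All numbers are invented for illustration. The "blended" forecast is a plain average of the two sources, which is an assumption made here for simplicity and not the combination method used in the cited study.

```python
def mean_absolute_error(forecasts, observed):
    """Average absolute gap between forecasts and observed outcomes (0 = perfect)."""
    if len(forecasts) != len(observed) or not forecasts:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(f - o) for f, o in zip(forecasts, observed)) / len(forecasts)


# Hypothetical weekly values (illustrative only, not real GFT or CDC data).
observed = [1.0, 2.0, 3.0, 4.0]
search_based = [1.5, 2.5, 3.5, 4.5]    # runs consistently high
lagged_reports = [0.8, 1.7, 2.8, 3.7]  # runs somewhat low

# Assumption: blend the two sources with a simple unweighted average.
blended = [(s + r) / 2 for s, r in zip(search_based, lagged_reports)]

mae_search = mean_absolute_error(search_based, observed)
mae_lagged = mean_absolute_error(lagged_reports, observed)
mae_blended = mean_absolute_error(blended, observed)

print(f"search-based: {mae_search:.3f}")
print(f"lagged reports: {mae_lagged:.3f}")
print(f"blended: {mae_blended:.3f}")
```

In this toy data the two sources err in opposite directions, so averaging cancels part of each error. Real data need not behave this way, which is one reason to keep each source's signal available for separate evaluation.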

How Digital Map Data Made a Difference

Bolstered by data-driven arguments for action, global attention to nutrition continues to grow, with more and more government leaders publicly committing to larger nutrition budgets. To meet the need, donor agencies are greatly increasing international aid disbursements for nutrition (see figure). The causes of malnutrition are complex, and definitively linking higher funding to impact is difficult. In many countries, however, progress is impressive. In India, for example, programs for nutrition are rapidly expanding and about 14 million fewer children are chronically undernourished now as compared to a decade ago.

Graphic: From reference [6] below. Used with permission.

This graph shows the lack of accuracy of Google Flu Trends compared with CDC estimates lagged by three weeks. From 2009 to 2013, the most accurate predictor was actually a combination of GFT with the lagged CDC estimates, suggesting the need for more collaboration between government agencies and private companies. Source: Lazer et al. 2014

References

1. Fung, Kaiser. 2014. “Google Flu Trends’ Failure Shows Good Data > Big Data.” Harvard Business Review. March 25, 2014. https://hbr.org/2014/03/google-flu-trends-failure-shows-good-data-big-data/ (accessed May 8, 2015)

2. Marbury, Donna. 2014. “Google Flu Trends Collaborates With CDC for More Accurate Predictions.” Medical Economics, November 5, 2014. http://medicaleconomics.modernmedicine.com/medical-economics/news/google-flu-trends-collaborates-cdc-more-accurate-predictions (accessed May 8, 2015)

3. Lazer, David. 2015. Northeastern University. Interview by Cheney Wells. Phone interview. May 1, 2015.

4. Lazer 2015 (interview).

5. Lazer, David, Ryan Kennedy, Gary King, and Alessandro Vespignani. 2014. “Google Flu Trends Still Appears Sick: An Evaluation of the 2013‐2014 Flu Season.” March 13, 2014. http://dx.doi.org/10.2139/ssrn.2408560 (accessed May 10, 2015)

6. Lazer, David, Ryan Kennedy, Gary King, and Alessandro Vespignani. 2014. “The Parable of Google Flu: Traps in Big Data Analysis.” Science 343 (6176): 1203-1205.

7. Lazer 2015 (interview).